The Project

Beats Exposed is an interactive performance experience that breaks down the barrier between audience and performer. By exposing the body’s vital signs, the performer invites the audience to see beyond the polished act and into the extreme physical and personal effort.

Beats Exposed is built for performances on or off stage. It is lightweight and can run in a variety of settings.

Our current iteration of the project is performed with an aerialist. It exposes the exertion behind an art form that is extremely demanding, yet typically meant to appear effortless.

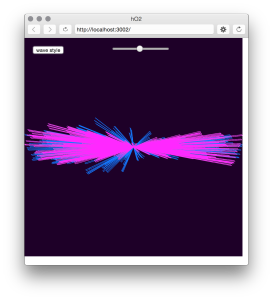

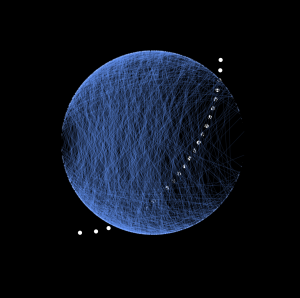

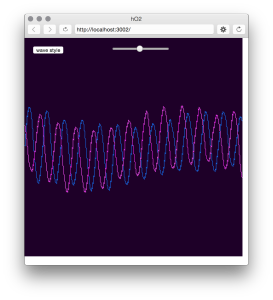

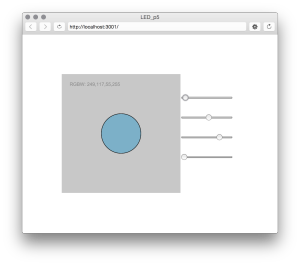

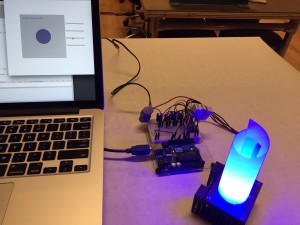

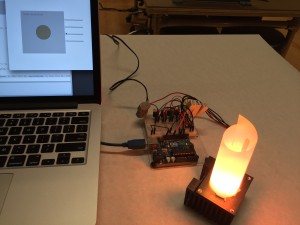

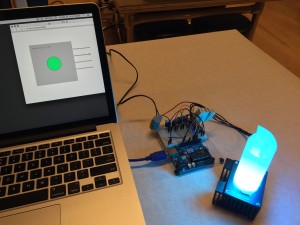

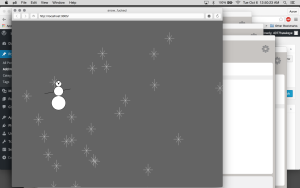

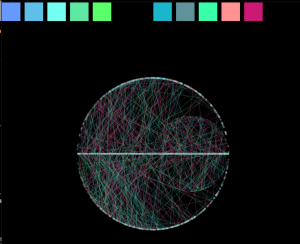

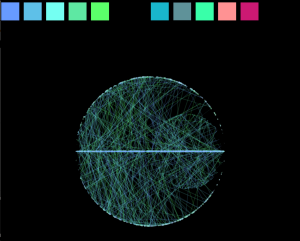

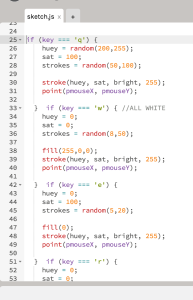

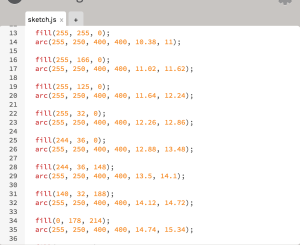

Our project senses the aerial performer’s heartbeat with a Polar pulse sensor and sends each pulse wirelessly via two Moteino boards. The pulse is then used in a P5 JavaScript sketch to drive the audio and visualizations.

In this experience, the audience hears the sound of a heartbeat timed with the performer’s pulse. The visualization, also reacting to the pulse, projects from the ceiling onto the performer, surrounding area, and any audience members that have come in close. The resulting experience is intimate, personal and engaging.
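Visuals that react to the pulse can be thought of as an envelope that jumps to full intensity on each beat and fades until the next one. The following is a minimal sketch under that assumption; `BeatEnvelope`, its `onBeat`/`valueAt` methods and the 300 ms decay constant are hypothetical names and values for illustration, not taken from our project code.

```javascript
// Hypothetical helper (not from the project's code): converts discrete
// heartbeat events into a 0..1 intensity that fades between beats.
class BeatEnvelope {
  constructor(decayMs = 300) {
    this.decayMs = decayMs;      // assumed fade time constant in milliseconds
    this.lastBeatMs = -Infinity; // no beat received yet
  }

  // Record a heartbeat at time nowMs (e.g. p5's millis()).
  onBeat(nowMs) {
    this.lastBeatMs = nowMs;
  }

  // Returns 1 at the moment of a beat, decaying exponentially afterwards,
  // and 0 before the first beat has arrived.
  valueAt(nowMs) {
    const elapsed = Math.max(0, nowMs - this.lastBeatMs);
    return Math.exp(-elapsed / this.decayMs);
  }
}
```

In a p5 sketch, one might call `envelope.onBeat(millis())` whenever a beat arrives (also a natural place to start the heartbeat sound) and read `envelope.valueAt(millis())` inside `draw()` to scale the size or brightness of the projected shapes. An exponential fade is just one simple choice; any curve that decays between beats would serve.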

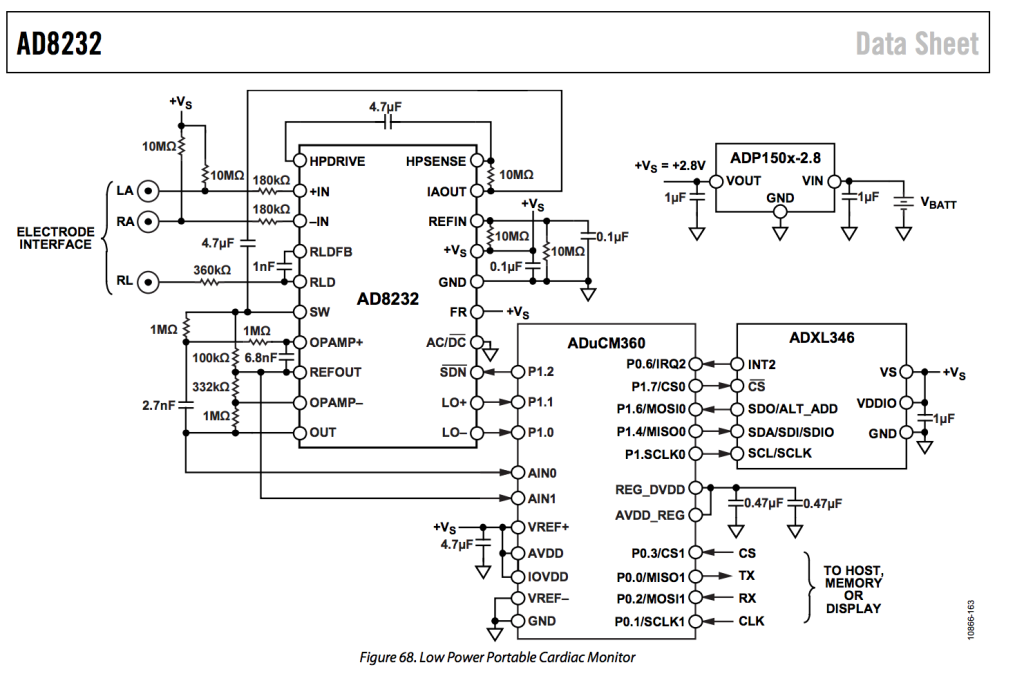

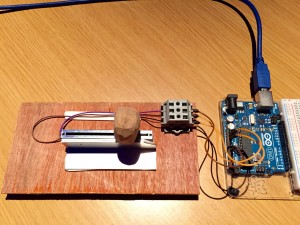

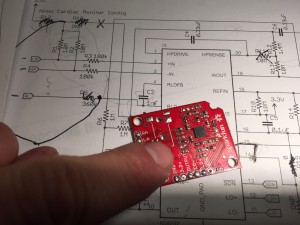

Our method for measuring the performer’s heartbeat changed drastically over the course of our testing. The first version used sticky electrodes attached to the chest and connected to an EKG operational amplifier, which amplifies the minute voltages generated by the heart. We quickly ran into noise issues whenever the performer made even a slight movement. After trying some simple signal processing in Arduino, as well as modifying our EKG breakout board to include heavy filtering, we still found it very hard to get a reliable signal.
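To illustrate why simple processing struggles, here is a hypothetical version of that kind of approach, written in JavaScript for readability rather than as Arduino C++: a moving-average smoother followed by threshold detection with a refractory period. The function name, window, threshold and refractory values are assumptions for the example, not the settings we actually used.

```javascript
// Illustrative only (hypothetical, not the project's Arduino code):
// moving-average smoothing plus threshold detection with a refractory period.
function detectBeats(samples, sampleRateHz, {
  window = 5,         // moving-average length in samples (assumed)
  threshold = 0.5,    // smoothed amplitude treated as a heartbeat (assumed)
  refractoryMs = 250, // ignore re-triggers this soon after a beat (assumed)
} = {}) {
  const beats = [];
  const refractorySamples = Math.round((refractoryMs / 1000) * sampleRateHz);
  let sum = 0;
  let lastBeat = -Infinity;
  let wasAbove = false;

  for (let i = 0; i < samples.length; i++) {
    // Running sum over the most recent `window` samples.
    sum += samples[i];
    if (i >= window) sum -= samples[i - window];
    const smoothed = sum / Math.min(i + 1, window);

    // Count a beat only on a rising threshold crossing outside the refractory period.
    const above = smoothed > threshold;
    if (above && !wasAbove && i - lastBeat >= refractorySamples) {
      beats.push(i);
      lastBeat = i;
    }
    wasAbove = above;
  }
  return beats; // sample indices of detected beats
}
```

The weakness shows up as soon as the body moves: a slow movement artifact large enough to cross the threshold looks exactly like a heartbeat to this detector, and lowering the threshold or widening the smoothing window only trades missed beats for false ones.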

After a lot of research into portable EKG units worn by the ‘patient’, I began to learn the importance of using an accelerometer alongside the electrodes to filter out movement noise. The first time I heard of such a thing was buried in a YouTube video.

Combining the operational amplifier with an accelerometer via a small microcontroller is also described in the datasheet for the heart rate sensor.
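One standard way to use an accelerometer for this is adaptive noise cancellation with an LMS (least mean squares) filter: the accelerometer serves as a reference for motion, and an adaptive FIR filter learns how that motion leaks into the EKG so it can be subtracted. This is a generic sketch of that technique, not necessarily what the datasheet or any commercial monitor implements; `lmsCancel`, the tap count and the step size `mu` are illustrative assumptions.

```javascript
// Generic LMS adaptive noise cancellation (hypothetical helper for illustration).
// primary:   EKG samples = heartbeat + motion noise
// reference: accelerometer samples correlated with the motion noise
function lmsCancel(primary, reference, taps = 4, mu = 0.05) {
  const w = new Array(taps).fill(0); // adaptive FIR weights
  const cleaned = new Array(primary.length);

  for (let n = 0; n < primary.length; n++) {
    // Reference vector: current and previous accelerometer samples (zeros before start).
    const x = [];
    for (let k = 0; k < taps; k++) x.push(n - k >= 0 ? reference[n - k] : 0);

    // Filter output = current estimate of the motion noise present in the EKG.
    let y = 0;
    for (let k = 0; k < taps; k++) y += w[k] * x[k];

    // Error = EKG minus estimated noise; this is also the cleaned output sample.
    const e = primary[n] - y;
    cleaned[n] = e;

    // LMS update: move each weight in the direction that reduces e^2.
    for (let k = 0; k < taps; k++) w[k] += mu * e * x[k];
  }
  return cleaned;
}
```

The step size trades convergence speed against stability: a larger `mu` adapts faster to changing movement, but too large a value makes the filter diverge or distort the heartbeat itself.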

Implementing this kind of digital signal processing ourselves was quickly starting to look too difficult for a project due in two weeks!

Eventually we found a product, the Polar T34 pulse monitor, that handles this processing. By using this band as our sensor, we were able to get a very accurate heartbeat pulse that was free from noise, even during movement.

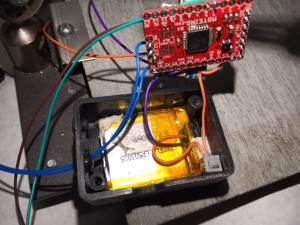

Our final human interface works as follows. The Polar monitor produces a pulse signal for every heartbeat, which is transmitted wirelessly by a Moteino transmitter worn by the performer (over a 915 MHz serial bridge). A second Moteino receives this 915 MHz signal and sends the pulse along to P5 via serial. To make sure that P5 sees every pulse, each heartbeat is represented by a pulse about 320 ms long.
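The reason for the long pulse can be sketched as follows, assuming the receiving Moteino streams the current pulse state (0 or 1) over serial and the sketch reads the latest value once per frame. At 60 frames per second, frames arrive about 16.7 ms apart, so a 320 ms pulse is always seen, but it spans roughly 19 frames; counting only the rising edge keeps one heartbeat from being counted many times. `RisingEdgeCounter` and `countSeenBeats` are hypothetical helpers, not part of our project code.

```javascript
// Hypothetical helper: counts a beat only on a 0 -> 1 transition,
// so a pulse held high across many frames registers once.
class RisingEdgeCounter {
  constructor() {
    this.previous = 0;
    this.beats = 0;
  }

  // Feed one pulse-state sample per frame; returns true only on a rising edge.
  update(state) {
    const isBeat = state === 1 && this.previous === 0;
    if (isBeat) this.beats++;
    this.previous = state;
    return isBeat;
  }
}

// Hypothetical simulation: sample, once per frame, a signal that is high for
// pulseMs after each beat time, and return how many beats the sketch registers.
function countSeenBeats(beatTimesMs, pulseMs, frameMs, durationMs) {
  const counter = new RisingEdgeCounter();
  for (let t = 0; t < durationMs; t += frameMs) {
    const high = beatTimesMs.some((b) => t >= b && t < b + pulseMs);
    counter.update(high ? 1 : 0);
  }
  return counter.beats;
}
```

With a very short pulse, for example 5 ms, every frame can fall between pulses and beats are silently dropped; holding the pulse for a few hundred milliseconds removes that risk as long as consecutive beats do not overlap.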

Our original proposal included a breath/stretch sensor in addition to the pulse sensor. We thought implementing it would be relatively simple. In reality, the stretch sensor was very sensitive to movement, and we were unsuccessful at getting a usable reading.

It took three iterations over about one month to get the pulse sensor working as we intended. We tried an optical pulse sensor, a three-electrode EKG heart monitor, and a two-electrode heartbeat monitor. All three of these sensors were plagued with electrical noise whenever the performer moved. Finally we arrived at the Polar T34 heartbeat band, which is designed to produce a reliable heartbeat even during heavy physical movement. We found this band provided an extremely accurate representation of the performer’s heartbeat, even when they were moving.

We spent a lot of time working on the serial communication to get a reliable signal. Once the signal was in P5, we found we had to simplify our sketches significantly for them to run reliably. In the future we would likely try using Processing for the visuals.
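One common source of unreliable serial input is that data does not arrive in neat messages: a single reading can be split across two reads. A minimal sketch of the usual fix, assuming newline-terminated messages such as those printed by Arduino’s `Serial.println()` (which ends lines with `\r\n`), is to buffer incoming text and act only on complete lines. `LineBuffer` is a hypothetical helper, not part of p5 or our project code.

```javascript
// Hypothetical helper: reassembles newline-terminated messages from
// serial data that may arrive in arbitrary chunks.
class LineBuffer {
  constructor() {
    this.pending = ""; // text received but not yet terminated by "\n"
  }

  // Append a chunk and return every complete line it finishes.
  push(chunk) {
    this.pending += chunk;
    const parts = this.pending.split("\n");
    // The last part has no newline yet; keep it for the next chunk.
    this.pending = parts.pop();
    // Strip the "\r" from "\r\n" endings and skip empty lines.
    return parts
      .map((line) => line.replace(/\r$/, ""))
      .filter((line) => line.length > 0);
  }
}
```

Each chunk received from the serial port would be passed to `push()`, and only the returned complete lines would be parsed as pulse values, so a half-received message is never misread as a beat.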

Our final code for the project can be found on GitHub: https://gist.github.com/lisajamhoury/e8a7de48f8155078efe2

Interestingly, some of our testers had a negative reaction to the sound of the heartbeat played over headphones. We don’t yet understand why some find the sound unbearable while others find it centering and calming. We will continue to refine the sound and user-test it to better understand this sensitivity.